You’ve just gotten into the office on a Monday and your CISO has pulled you into a meeting. The board has decided you’re moving your SIEM off of Splunk (or Elastic, Rapid7, etc.) to save money and better manage the new APT-6272 out of Elbonia. The replacement must be “AI” and “Data Science” enabled, and it must work “at scale”. You can’t fully pay attention over the acidic and painful noises coming from the lower depths of your coffee processing unit, but you’re pretty sure you’ve heard enough buzzwords to make even the interns roll their eyes. Though you’ve been assured you can “shift left” and that it only needs to be an “MVP” to start, you’re quickly running through the mental list of your backlog, which has seemingly fallen into a black hole.

Quick, what do we do? Where do we start? You’re in luck, because the CISO sent you the Gartner Magic Quadrant, and since you’re moving to a cloud-first solution, your first choice is obviously Microsoft, or so the CISO tells you. This scenario is playing out repeatedly across the industry as organizations that were not caught up in the rush to move off-prem in 2020 (due to a certain global work-from-home event) look to de-risk and shift CAPEX funds to OPEX, especially as hardware and infrastructure renewals come into play for 2025 and beyond.

Assuming, dear reader, that you’ve not succumbed to a panic attack, we can move on to how Security Information and Event Management (SIEM) and cloud-native solutions can work together to create a “Big Data” solution that scales to your security budget and your detection and response needs. We cover a DevOps-focused approach that is realistic for security teams that need to up-level those capabilities, along with the assumptions and potential pitfalls you may encounter.

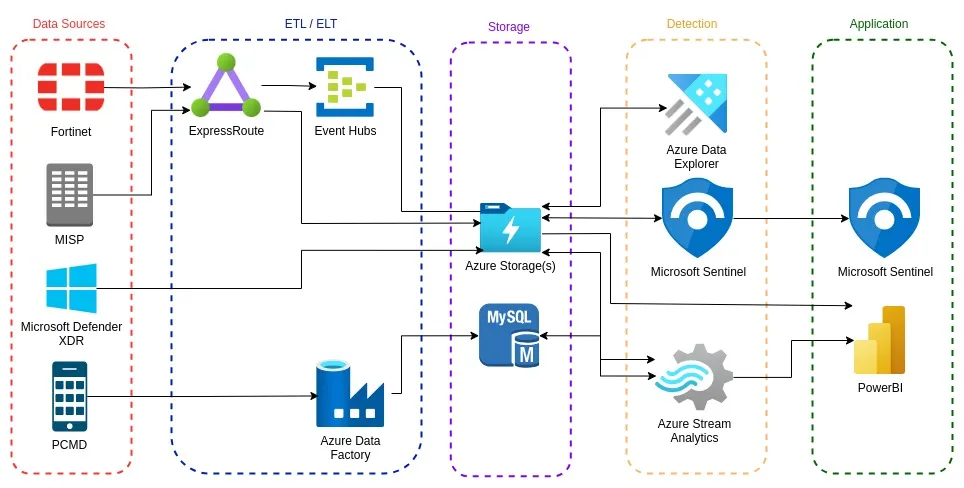

In this 3-part knowledge-share series, we seek to help security leaders and decision makers establish a foundation and framework for a SIEM Modernization (aka “The Big Data SIEM”) development strategy at the multiple-petabyte-per-day ingestion scale, 90% or more within the Microsoft Azure SLA. Follow-up articles will provide guidance for the same scenario and business use case (shown below) with Google Cloud Platform (GCP), Amazon Web Services (AWS) and Oracle Cloud Infrastructure (OCI). We’ll also provide detailed examples of pitfalls, design mistakes, implementation challenges and ideal team compositions for each layer in the series.

I’m joined in each post by co-author, friend and colleague, Tyson Barber.

The exploration and early design process we’ll go through, and the considerations behind it, are based on a framework built on nearly three decades of combined experience in the IT, cloud and security sectors between the author and co-author. Opinions on specific technologies, methodologies and the like are our own and do not represent the stance of any organization we are, or have been, affiliated with.

Within this post, we will provide a high-level overview of the core SIEM concepts; subsequent posts will dive into each architectural layer in medium-level detail. All posts within this series will provide high-to-medium level guidance in support of your journey, and will be written in conversational form for ease of reading. Hyperlinks will be provided for all technologies and references.

Series Scenario

For this scenario, we’ll assume that our security division seeks to transition their SIEM capabilities from on-prem server infrastructure to a new public cloud subscription. This division ingests 3 petabytes of threat, syslog, firewall and other data types each day from more than 100 data sources. Once ingested, our division plans to run both scheduled and ad-hoc queries to aid Detection Engineering, Threat Hunting, SOC and other specialty teams in their daily workload.

Data stored must adhere to PCI DSS compliance standards, which (in the case of our scenario) means that you will need to design an efficient method for storing detections (with their context) for 365 days. Finally, our division’s executive management team has repeatedly expressed an interest in developing and implementing AI technologies, so your ingestion, storage, and detection pipelines must be modular enough to support peripheral workloads, such as possible MLOps and serving layers, in the future.
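To put those numbers in perspective, here is a rough back-of-the-envelope sketch of what the scenario implies. It assumes decimal units, a perfectly uniform ingest rate, no compression, and exactly 100 equally sized sources; none of these assumptions come from the scenario itself. The retention figure is an upper bound for the case where every ingested byte is kept for the full year, which the scenario does not require.

```python
# Back-of-the-envelope sizing for the series scenario.
# Assumptions (ours, for illustration): decimal units, uniform ingest rate,
# no compression, and exactly 100 sources of equal volume.
PB = 10**15  # bytes in one petabyte (decimal)
TB = 10**12  # bytes in one terabyte (decimal)
GB = 10**9   # bytes in one gigabyte (decimal)
SECONDS_PER_DAY = 24 * 60 * 60

daily_ingest_bytes = 3 * PB   # from the scenario
retention_days = 365          # from the scenario
source_count = 100            # scenario says "more than 100"

# Average rate the ingestion layer must sustain around the clock.
sustained_gb_per_s = daily_ingest_bytes / SECONDS_PER_DAY / GB

# Upper bound on stored volume if everything ingested were retained.
retained_pb = daily_ingest_bytes * retention_days / PB

# Average daily volume per source if all sources were the same size.
avg_tb_per_source_per_day = daily_ingest_bytes / source_count / TB

print(f"Sustained ingest: {sustained_gb_per_s:.1f} GB/s")
print(f"Raw volume at {retention_days} days: {retained_pb:,.0f} PB")
print(f"Average per source: {avg_tb_per_source_per_day:.0f} TB/day")
```

Real traffic is bursty and real sources are uneven, so peak rates will sit well above the average. Treat these figures as an order-of-magnitude starting point, not a capacity plan.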

Surprisingly, this series scenario is fairly common within the Telecommunications, Heavy Manufacturing, Aerospace & Defense, Energy, Oil & Gas, Retail and Financial sectors, as well as at many companies that self-perform much of their SIEM workload. A key difference in our model is that the data is interoperable. If designed correctly, you reduce re-ingestion of redundant data and transform the ingested data with a security focus.

Basic Layers of the Big Data SIEM

A proven strategy is to compartmentalize the core of your modern SIEM architecture into four basic layers. This simplifies the design process, project planning and implementation by providing a distinct category for each area of focus. It differs from standard SIEM solutions, which are generally end-to-end and often walled gardens. The major highlight of this environment and use case is that it can work in tandem with the big data storage and ingestion already in use at your organization, and that it allows you to add smaller-scope solutions to meet individual team needs rather than imposing “one size fits all” on the security organization. We’ll cover how to use this design, and how different security functions can operate within this model, in later articles.

Though it may break the mold in a small way, we typically recommend viewing the architecture within the following layers while transitioning your SIEM to the public cloud:

- Ingestion: This layer is responsible for connecting data sources to the storage accounts in your public cloud. While planning this layer, remember that high-performance designs are often the simplest, leveraging open-source technologies or methodologies and passing through as few services as possible before landing in the appropriate storage container.

- Storage: While seemingly simple, there are many considerations for the technologies within this layer, given not only the throughput requirements of handling multiple petabytes per day, but also the efficient management of the resources themselves. Within this layer, you will be serving detection queries originating from the detection (compute) layer, and performing Master Data Management (MDM), Data Lifecycle Management (DLM) and many analytic workloads.

- Detection: For our SIEM Modernization scenario with Azure, the detection layer consists of resources which allow us to run queries against the stored data. For Azure this includes resources such as Azure Data Explorer (ADX), Microsoft Sentinel, Azure Stream Analytics (ASA), and many third-party services. Typically seen as the Execution sub-layer of the Application cloud layer, we find that it makes sense to break this layer out, given the SIEM’s heavy focus on detection.

- Application: This layer consists of different cloud services and applications, which are divided into Execution and Application types. Within this layer, it’s common to see Azure Synapse Analytics, Azure Stream Analytics and Power BI, as well as Grafana, Kibana, Tableau or incident management systems.

While some resources may be technically capable of bridging the gap between multiple or even all four layers, this line of thinking should be approached with extreme caution. Often, these miracle services will not only make for a less performant architecture, but your SIEM as a whole will enjoy less modularity (and thus, less flexibility) to adapt to future workloads without significant overhaul. We’ve often witnessed significant complexity being added to the overall solution by these wonder tools, or by attempts to patch the void that remains in the wake of the first invoice period. Our caution is that there is no free lunch; we suggest leveraging cloud-native, DevOps-focused tools that have a large user base and, with it, broad support.
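The modularity argument above can be sketched in a few lines of Python. This is a toy illustration under our own assumptions, not a reference implementation: the `Event` shape, the `source|message` line format, and every function and class name here are hypothetical, and the in-memory store stands in for real cloud storage. The point is only that each layer talks to its neighbor through a narrow interface, so one layer can be swapped without touching the others.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Protocol

Event = dict[str, str]  # hypothetical flat event record


class Storage(Protocol):
    """The only contract the other layers depend on."""

    def write(self, events: Iterable[Event]) -> None: ...
    def scan(self) -> Iterable[Event]: ...


@dataclass
class InMemoryStorage:
    """Stand-in for the storage layer; a real design would use cloud storage."""

    _events: list[Event] = field(default_factory=list)

    def write(self, events: Iterable[Event]) -> None:
        self._events.extend(events)

    def scan(self) -> Iterable[Event]:
        return iter(self._events)


def ingest(raw_lines: Iterable[str], storage: Storage) -> None:
    """Ingestion layer: parse 'source|message' lines and land them in storage."""
    parsed = []
    for line in raw_lines:
        source, _, message = line.partition("|")
        parsed.append({"source": source, "message": message})
    storage.write(parsed)


def detect(storage: Storage, rule: Callable[[Event], bool]) -> list[Event]:
    """Detection layer: run a query (here, a simple predicate) over stored events."""
    return [event for event in storage.scan() if rule(event)]


def render(alerts: list[Event]) -> list[str]:
    """Application layer: turn detections into something a person can read."""
    return [f"[{a['source']}] {a['message']}" for a in alerts]


storage = InMemoryStorage()
ingest(["firewall|deny tcp 10.0.0.5", "syslog|session opened"], storage)
alerts = detect(storage, lambda e: "deny" in e["message"])
report = render(alerts)
print(report)
```

Because `ingest`, `detect` and `render` only know about the `Storage` contract, replacing `InMemoryStorage` with a different backend would not require changes to the other three layers. A single service that spans all four layers gives up exactly that seam.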

It may not be a part of the original project scope (for any number of reasons), but designing and building within the four basic layers discussed above will allow for easy implementation of many additional layers, two of which are common offshoots from our original scenario:

- Big Data Analytics: With few exceptions, modern cyber security solutions and methodologies are largely driven by big data analysis. However, not all Azure resources are capable of supporting big data analytic workflows or pipelines. While this can be difficult to see when it seems far outside our original scope, the principles and resources we will recommend in this series (in particular, Storage) allow for secondary consumer groups of the ingested data, such as your data science teams.

- AI & MLOps: Designing intelligent automation and assisted solutions to enhance your team’s capabilities is at the forefront of thinking for most organizational leadership. However, as with the preceding layer, special considerations must be made in the early phases of your architectural design in order to accommodate the requirements of this type of workflow.

Though seemingly different from each other and from the main scenario, these two additional layers (and many more like them) have the same core requirements as the workflows within the modern SIEM where ETL, storage and compute layer architectural design are concerned. For the leadership team, this means that it is possible to design an architecture which is capable of facilitating the needs of multiple efforts.
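As a minimal sketch of what “secondary consumer groups” means in practice, the toy example below lets a detection team and a data science team read the same landed data at their own pace, without ingesting it twice. The `SharedLog` class and the group names are hypothetical and exist only for illustration; managed streaming and storage services offer their own equivalents of this pattern.

```python
from collections import defaultdict


class SharedLog:
    """Hypothetical append-only store with one read offset per consumer group."""

    def __init__(self) -> None:
        self._records: list[str] = []
        self._offsets: defaultdict[str, int] = defaultdict(int)

    def append(self, record: str) -> None:
        """Land a record once; every consumer group can read it later."""
        self._records.append(record)

    def read_new(self, group: str) -> list[str]:
        """Return records this group has not seen yet and advance its offset."""
        start = self._offsets[group]
        self._offsets[group] = len(self._records)
        return self._records[start:]


log = SharedLog()
log.append("auth failure user=alice")
log.append("auth success user=bob")

# The detection team reads first and keeps up with new data as it arrives.
detection_batch = log.read_new("detection")
log.append("auth failure user=carol")
detection_next = log.read_new("detection")

# The data science team joins later and still sees everything, ingested once.
data_science_batch = log.read_new("data-science")

print(len(detection_batch), len(detection_next), len(data_science_batch))
```

Each group tracks only its own position, so adding a new consumer later (an MLOps pipeline, for example) costs nothing on the ingestion side.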

Closing Thoughts

As we continue this series, we sincerely hope that our experience and opinions provide some tangible value and insight as you explore ways to apply big data principles within the security context. The Azure SIEM Modernization journey will differ between organizations, but the base principles will be similar. As we build out additional examples and articles using other cloud environments and hyperscalers, we hope the information will demonstrate methods that you can use to implement or mimic the ingestion, storage, compute (detection) and consumption (response/visualization) components, and make dealing with obscene amounts of threat and log data more tolerable.

Please reach out if you have questions or wish to see more information on any of the points we have written about. If you’d like us to focus on certain areas that may help your unique situation, please comment or reach out to Tyler G. (Tyler G. LinkedIn) or Tyson Barber (Tyson B. LinkedIn). We do not claim that a one-size-fits-all solution is the answer. What we’ve designed and what we discuss is a modular and evolving framework which will allow you to attach legacy SIEM systems, Data Science, and AI-driven management for security needs.